WYSK: 07/29/22

This Week: One Good Thing; 1. AI Philosophers; 2. Meta's Woes; 3. DNA investigation; 4. Work Ethic

What you should know from the week of 07/29/22:

- One Good Thing: Pizza heroism.

- AI Philosophers: Manufacturing ethics loopholes in AI research;

- Meta's Woes: Compounding failures cause massive drop in Facebook/Meta stock, reflecting eroding trust in the company;

- DNA investigation: DNA collected for newborn health screenings used in a criminal prosecution;

- Work Ethic: Chasing excellence at Stripe, and finding meaning outside "me."

One Good Thing:

This week a pizza delivery driver, Nicholas Bostic, saved a bunch of kids from a house fire.

Because of the dense smoke, he said his only option was to exit through a second-floor window. Bostic punched out the glass and jumped to safety with the 6-year-old girl in his arms. He suffered multiple injuries but the girl only suffered a minor cut to her foot.

We're awash in news of violence (largely driven by ballooning media coverage rather than ballooning incidents), but there are regular citizens heroically saving others (see Kentucky as well).

While we shouldn't ignore the villainy, we should spend more of our emotional energy thinking about the heroism.

AI Philosophers:

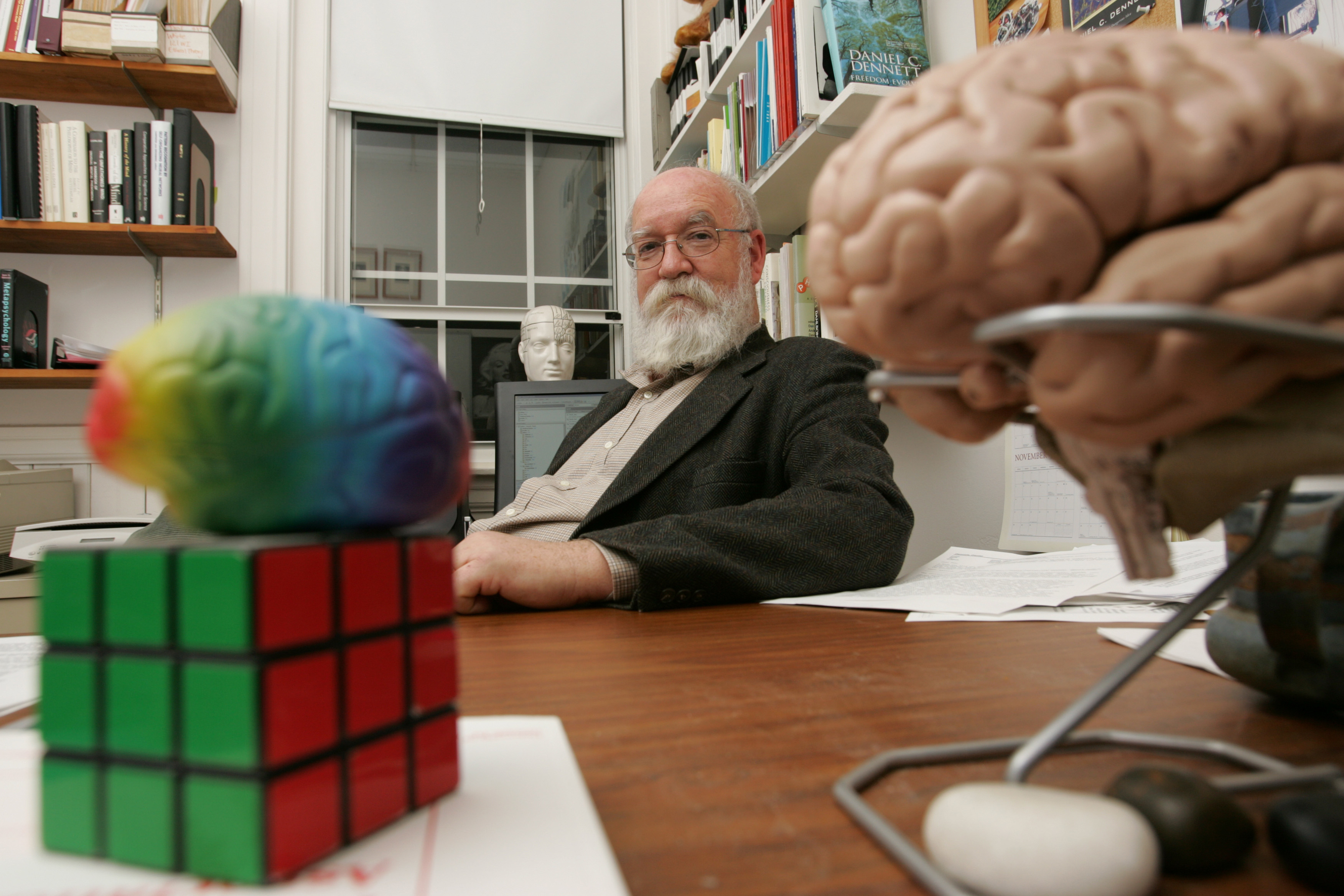

An interesting article this week from Shayla Love in VICE about using AI models to mimic real people's writing:

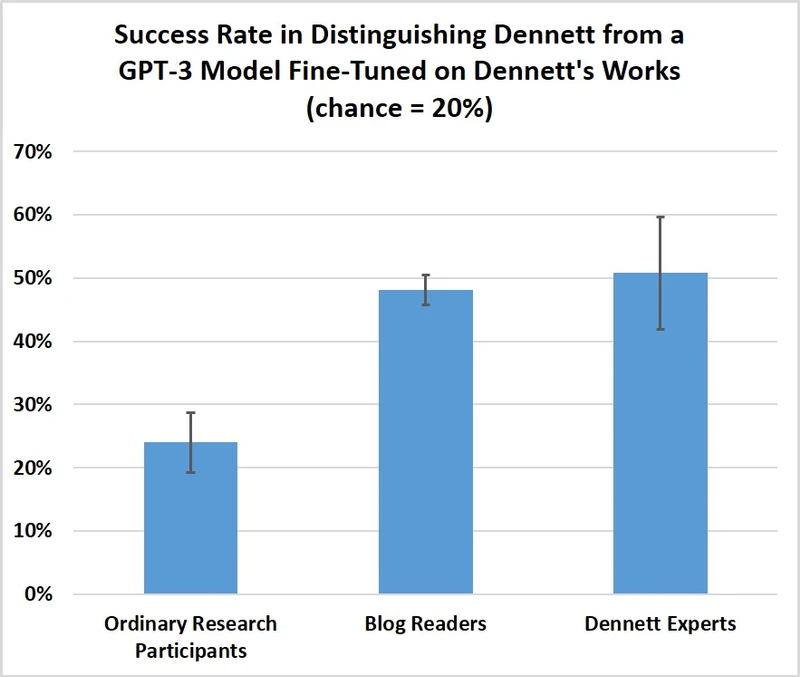

A recent experiment from the philosophers Eric Schwitzgebel, Anna Strasser, and Matthew Crosby quizzed people on whether they could tell which answers to deep philosophical questions came from [philosopher Daniel] Dennett and which from [AI model] GPT-3. The questions covered topics like...“Do human beings have free will?” and “Do dogs and chimpanzees feel pain?”...

But the reporting becomes truly fascinating when read alongside a tweet from Emily M. Bender, one of the authors of Stochastic Parrots:

In Stochastic Parrots, we referred to attempts to mimic human behavior as "a bright line in ethical AI development" (I'm pretty sure that pt was due to @mmitchell_ai but we all gladly signed off!) This particular instance was done carefully, however >>

The experiment itself showed that participants, ranging from 'ordinary people' to experts in Daniel Dennett's work, were unable to perfectly distinguish between the AI-generated and the authentic responses. Experts were usually able to correctly identify the real answers, but ordinary participants' guesses were only slightly better than random chance.

There are two very interesting takeaways for me in this experiment:

AI ethics are proving pretty malleable:

The Stochastic Parrots paper says "Work on synthetic human behavior is a bright line in ethical AI development..." (Merriam-Webster defines 'bright line' as "providing an unambiguous criterion or guideline especially in law"). Yet the same author who called synthetic human behavior a bright line in 2021 applauds such work just a year later.

As we talk about ethics in AI, this is huge. AI is so interesting and profitable that ethics tend to become malleable under the heat of curiosity and incentive. Bender's about-face is a perfect example of the Special Pleading fallacy: 'This kind of work is clearly unethical, but not in this one special case where people were really careful.'

Researchers' willingness to redefine ethics because of extenuating circumstances is very dangerous in this burgeoning field.

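Obligatory Jurassic Park quote: "Your scientists were so preoccupied with whether or not they could, they didn't stop to think if they should."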

In this case it should probably be modified to: "Your scientists knew they shouldn't but really wanted to know if they could, and so figured that they would just be extra careful."

AI-generated responses are convincing when the answer isn't clear:

The danger is that we don’t know what’s made up and what’s not. If Scheutz asked GPT-3 a question he himself didn’t know the answer to, how could he assess if the answer is correct?

AI that merely provides us with answers we already know is obviously not very useful outside of limited research applications. The ultimate goal is always to develop AI that can reach conclusions that would be prohibitively resource-intensive to reach through other means. That is, to have AI solve problems we don't know the answer to.

While the hard sciences are also vulnerable, the soft sciences that shape society are intrinsically more vulnerable to suggestion from GPT-3-esque models. Obligatory XKCD:

Meta's Woes:

Alright, this article is not actually from this week—it is from February 2022—but it was a reader request and very timely given some other news that did break this week.

When Kif Leswing wrote this article, Facebook was bellyaching about Apple's privacy-enhancing changes to iOS:

Facebook said on Wednesday that Apple’s App Tracking Transparency feature would decrease the company’s 2022 sales by about $10 billion.

...

In December 2020, Facebook ran a marketing campaign including full-page ads in major newspapers blasting the feature and saying that the change was about “profit, not privacy.”

Of course, Apple made this change out of a desire to improve its competitive market position, not just out of some pure desire to improve consumer protections. However, Apple's decision aligns with consumer interests. Despite the ad industry's monotonous claim that consumers like ads because of the customization they get to see, iPhone users generally chose privacy over customization:

A study from ad measurement firm AppsFlyer in October suggested that 62% of iPhone users were choosing to opt-out of sharing their IDFA [a device ID that’s used to target and measure the effectiveness of online ads].

Investors were not happy with the announcement. However, I believe that Facebook is spinning the impact of Apple's privacy changes to hide some key failures. Apple is a convenient scapegoat for a company that is growing steadily less alluring and more toxic:

Meta shares sank 23% in extended trading on Wednesday after the company warned about numerous challenges and came up short on user numbers.

As stated clearly in Facebook's fourth-biggest quote from 2021, Facebook's profitability is tied to how much time consumers spend on Facebook's products.

But now TikTok is sucking up the consumer eyeballs that used to be trained on Facebook, while the legacy platform is increasingly associated with 'racist uncles,' QAnon conspiracies, 'boomers,' data scandals, Russian troll farms, 'cancel culture' and censorship, genocide, and livestreamed shootings. Vilified by both Left and Right and unilaterally led by a deeply unsympathetic CEO (roll 0 for charisma), Facebook is beginning to really struggle.

Not only did Facebook "come up short on user numbers" in early 2022, but it just reported a drop in revenue for the first time ever:

Facebook parent Meta was hit with a double whammy in the last three months: revenue fell for the first time ever and profit shrank for the third straight quarter, amid growing competition from TikTok and nervousness from advertisers.

Reflecting these combined failures, Facebook/Meta's stock is actually down 6% over the last five years. Six percent! To put that in perspective, Apple's stock is up over 300% in that time, and the S&P 500 is up 66%. Even beleaguered Twitter is up 41% from five years ago.

Last year the company rebranded as "Meta Platforms" in an attempt to future-proof itself. If Meta succeeds, it will have massive influence on consumers, but it is looking more likely that the company may succumb to its losses before realizing its full vision (this unfortunately wouldn't neutralize the dangers of the metaverse that many companies are envisioning and working toward).

DNA investigation:

Wild reporting on privacy and civil liberties from Corin Faife in The Verge:

According to a lawsuit filed by the New Jersey Office of the Public Defender (OPD), ...New Jersey State Police successfully subpoenaed a testing lab for a blood sample drawn from a child. Police then performed DNA analysis on the blood sample that reportedly linked the child’s father to a crime committed more than 25 years ago.

"All babies born in the state of New Jersey are required to have a blood sample drawn within 48 hours as part of a mandatory testing program that screens them for 60 different disorders." And while the police don't have direct access to the blood sample database, the ability to subpoena the database gives police access to that information. Two key takeaways from this:

Data outlives the agreements it was collected under:

As we develop more ways to extract insights from data, the value of some data continues to grow. Collecting and storing data is essentially an investment.

Just as fracking becomes economically viable as an oil-extraction method once oil prices are high enough, more-intrusive analysis of data becomes tempting once the accretion of data and advances in data analytics allow new value to be extracted from existing datasets.

And those new conditions are created after the data is collected.

This kind of trust-violating behavior harms society:

When this data is used for new purposes, what was previously an easy healthcare decision that benefited a child becomes a more complex one. At some point in the future, will my child be prohibited from certain jobs because of some AI-driven pseudo-scientific assertion that they have certain predispositions to crime, or will healthcare be more expensive because they are genetically predisposed to smoking?

Work Ethic:

Brie Wolfson writes about work culture at Stripe back when it was a startup.

It's a really interesting article that walks through the passion and investment of working at Stripe, versus the bland acceptance of other organizations. See the difference:

Stripe:

My work was meticulously but warmly critiqued by my peers and leaders alike, and my work got better and better because of it... It felt like magic, but there was deep thought, care, and intention behind everything. I had a tingly feeling that I was part of an organization that had cracked something about creating a great culture.

Other Startup:

A few months ago, someone complained to me that the new (very hot stuff) startup they were at had a “lgtm culture.” Upon inquiry, they explained that no matter what they do or how good it is, everyone just says “looks good to me.” “I know I should feel good about being a competent, trusted, contributing team member,” he continued, “and my new colleagues are so, so kind, but at the end of the day I just feel like no one has any standards.” He looked down at his coffee for a moment. “I’m afraid I’m never going to see my best work again.”

Ok, the WRONG takeaway is that there is an inverse correlation between kind colleagues and high-quality work. Note that Wolfson's peers critiqued her work warmly and supportively, and I'd argue that the colleagues of her friend at the other startup were not so much kind as indulgent.

Rather, I think Wolfson found something exceedingly rare at Stripe: a culture devoted to something greater than "me."

I don't think work is the best and highest place for this kind of culture, but it is an excellent thing to find anywhere. And it is especially rare in a tech industry that is devoted to pampering the wants and desires of the individual (personal laundry service, free food, etc.) and a job-hopping culture designed solely around maximizing personal benefits. To be clear, neither being paid well nor switching jobs is wrong, but chasing those elements instead of excellent performance will prove empty and meaningless.

C.S. Lewis describes an element of this in his tremendous "Inner Ring" essay:

The quest of the Inner Ring will break your hearts unless you break it. But if you break it, a surprising result will follow. If in your working hours you make the work your end, you will presently find yourself all unawares inside the only circle in your profession that really matters. You will be one of the sound craftsmen, and other sound craftsmen will know it. This group of craftsmen will by no means coincide with the Inner Ring or the Important People or the People in the Know. It will not shape that professional policy or work up that professional influence which fights for the profession as a whole against the public: nor will it lead to those periodic scandals and crises which the Inner Ring produces. But it will do those things which that profession exists to do and will in the long run be responsible for all the respect which that profession in fact enjoys and which the speeches and advertisements cannot maintain.

Interest piqued? Disagree? Reach out to me at TwelveTablesBlog [at] protonmail.com with your thoughts.

Photo by Tamara Gak on Unsplash